4.2.2. Introduction to Probability

4.2.2.1. Motivation

We have seen that concepts of statistical thermodynamics, such as the Boltzmann factor and the partition function, require the use of probability. These notes introduce some fundamental concepts of discrete and continuous probability that we have used and will continue to use.

4.2.2.2. Learning Goals:

After using these notes (or attending lecture), students should be able to:

Plot continuous and discrete probability densities of one variable.

Normalize continuous and discrete probability densities.

Compute average quantities from probability densities.

4.2.2.3. Coding Concepts

The following coding concepts are used in this notebook:

4.2.2.4. Discrete Probability

Discrete probability, or the probability of discrete events, describes the likelihood of observing outcomes such as the result of flipping a coin or rolling a die. Consider flipping a coin. Intuition tells us that, assuming the coin is “fair”, we should have

\[P_{Heads} = P_{Tails} = \frac{1}{2}\]

More generally, for a discrete event \(j\) (e.g. getting heads when flipping a coin), we have that

\[P_j = \frac{N_j}{N}\]

where \(N_j\) is the number of \(j\) events and \(N\) is the total number of events.
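The following is a small sketch of how \(P_j = N_j/N\) can be estimated from a list of recorded outcomes. It counts each event with `collections.Counter` and divides by the total number of events. The helper name `estimate_probabilities` is ours and is not part of the notes.

```python
from collections import Counter

def estimate_probabilities(outcomes):
    """Estimate P_j = N_j / N for every distinct outcome j in `outcomes`."""
    counts = Counter(outcomes)   # N_j: how many times each outcome occurred
    total = len(outcomes)        # N: total number of events
    return {event: n_j / total for event, n_j in counts.items()}

# the nine flips recorded in the coin-toss run shown in these notes
flips = ['H', 'H', 'T', 'T', 'H', 'H', 'H', 'T', 'T']
probs = estimate_probabilities(flips)
print(probs)                 # P_H = 5/9, P_T = 4/9
print(sum(probs.values()))   # the estimates add up to 1 (up to rounding)
```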

For example, if we flipped a coin nine times we might get:

```python
import random  # library to generate random integers

def coinToss(number):
    """Simulate `number` fair coin flips and report the outcomes."""
    recordList, heads, tails = [], 0, 0  # multiple assignment
    for i in range(number):  # do this 'number' amount of times
        flip = random.randint(0, 1)  # 0 or 1, each with probability 1/2
        if flip == 0:
            recordList.append("H")
            heads += 1
        else:
            recordList.append("T")
            tails += 1
    print(str(recordList))
    print("Number of Heads:", heads)
    print("Number of Tails:", tails)

coinToss(9)
```

['H', 'H', 'T', 'T', 'H', 'H', 'H', 'T', 'T']

Number of Heads: 5

Number of Tails: 4

From this data we would say that:

\[P_{Heads} = \frac{5}{9} \approx 0.56, \qquad P_{Tails} = \frac{4}{9} \approx 0.44\]

In this case we don’t quite get the expected \(P_{Heads} = P_{Tails} = 0.5\). That value is only recovered in the limit of infinitely many coin flips.
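A minimal sketch can show the estimate settling toward 0.5 by repeating the experiment with more flips. It assumes a seeded `random.Random` generator, so that reruns give the same numbers, and a hypothetical helper `fraction_heads`. For a fair coin, the typical deviation from 0.5 shrinks roughly like \(1/\sqrt{N}\), so larger samples should land closer, though any single run can fluctuate.

```python
import random

def fraction_heads(n_flips, seed=0):
    """Return N_Heads / N for n_flips simulated fair-coin tosses."""
    rng = random.Random(seed)  # seeded generator: reproducible results
    # 0 -> heads, matching the convention used in coinToss
    heads = sum(1 for _ in range(n_flips) if rng.randint(0, 1) == 0)
    return heads / n_flips

for n in [9, 100, 10_000, 100_000]:
    p_heads = fraction_heads(n)
    print(f"N = {n:>7}: P_Heads ~ {p_heads:.4f}, |P_Heads - 0.5| = {abs(p_heads - 0.5):.4f}")
```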

Some notes on discrete probabilities in general. We say that

\[P_j = 1\]

indicates that event \(j\) will happen with complete certainty. Similarly,

\[P_j = 0\]

implies that event \(j\) is impossible.

Additionally, the following must be true

\[\sum_j N_j = N\]

and

\[\sum_j P_j = 1\]

The first of these statements simply says that the sum over all events of the number of events of each type must be equal to the total number of events. The second states that the probabilities of events must sum to 1. This is equivalent to the normalization condition.

4.2.2.4.1. Computing Average Quantities from Discrete Probability Densities

If we consider discrete events whose outcome is a number, we can compute the expected average value of that outcome. Examples include rolling a die or measuring quantized energy levels. In this case, we compute the average value of the discrete quantity \(x\) as

\[\langle x \rangle = \sum_{j=1}^{n} x_j P_j = \sum_{j=1}^{n} x_j P(x_j)\]

where there are a total of \(n\) possible outcomes and the \(j\)th outcome has probability \(P_j\), or \(P_j = P(x_j)\). The last form, \(P(x_j)\), introduces the idea that \(P\) is a function of \(x\) that returns the probability of observing that outcome. \(P(x)\) is referred to as a probability density.
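The sum above translates directly into code. This sketch uses a hypothetical helper `expectation` that also accepts a function \(f\). The same call can therefore give \(\langle x \rangle\) or \(\langle x^2 \rangle\) through \(\langle f(x) \rangle = \sum_j f(x_j)P(x_j)\). A fair six-sided die is used only as an illustrative input.

```python
def expectation(values, probs, f=lambda x: x):
    """Return <f(x)> = sum_j f(x_j) P(x_j) for a discrete distribution."""
    if len(values) != len(probs):
        raise ValueError("values and probs must have the same length")
    return sum(f(x_j) * p_j for x_j, p_j in zip(values, probs))

# a fair six-sided die: outcomes 1..6, each with probability 1/6
faces = [1, 2, 3, 4, 5, 6]
p_face = [1 / 6] * 6
print(expectation(faces, p_face))                   # <x>   ~ 3.5
print(expectation(faces, p_face, lambda x: x**2))   # <x^2> ~ 91/6 ~ 15.17
```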

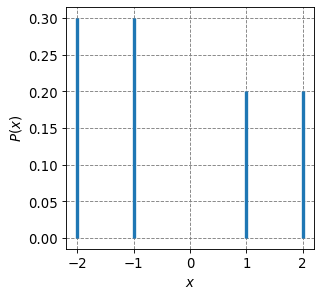

4.2.2.4.2. Example of a Discrete Probability Density

Consider the following data:

| x | P(x) |
|---|---|
| -2 | 0.3 |
| -1 | 0.3 |
| 1 | 0.2 |
| 2 | 0.2 |

Is \(P\) normalized?

Plot \(P(x)\).

Compute \(\langle x \rangle\).

Compute \(\langle x^2 \rangle\).

Is \(P\) normalized? To address this, we compute

\[\sum_{j=1}^4 P(x_j) = 0.3 + 0.3 + 0.2 + 0.2 = 1\]

Since \(\sum P = 1\), the probability distribution is normalized.

```python
import numpy as np

x = np.array([-2, -1, 1, 2])        # outcomes x_j
p = np.array([0.3, 0.3, 0.2, 0.2])  # probabilities P(x_j)

norm = np.sum(p)  # equals 1 for a normalized distribution
print(norm)
```

1.0

Plot \(P(x)\). See the plot below.

```python
import matplotlib.pyplot as plt
%matplotlib inline

# setup plot parameters
fontsize = 12
fig = plt.figure(figsize=(4, 4), dpi=80, facecolor='w', edgecolor='k')
ax = plt.subplot(111)
ax.grid(True, which='major', axis='both', color='#808080', linestyle='--')
ax.set_xlabel("$x$", size=fontsize)
ax.set_ylabel("$P(x)$", size=fontsize)
plt.tick_params(axis='both', labelsize=fontsize)

# plot distribution: a vertical line of height P(x_j) at each x_j
plt.vlines(x, 0, p, lw=3)
plt.show()
```

Compute \(\langle x \rangle\):

\[\begin{aligned} \langle x \rangle &= \sum_{j=1}^4 x_jP(x_j) = (-2)\cdot 0.3 + (-1)\cdot 0.3 + (1)\cdot 0.2 + (2)\cdot 0.2 \\ &= -0.3 \end{aligned} \tag{4.37}\]

```python
print(np.round(np.sum(p * x), 2))  # <x> = sum_j x_j P(x_j)
```

-0.3

Compute \(\langle x^2 \rangle\):

\[\begin{aligned} \langle x^2 \rangle &= \sum_{j=1}^4 x_j^2P(x_j) = (-2)^2\cdot 0.3 + (-1)^2\cdot 0.3 + (1)^2\cdot 0.2 + (2)^2\cdot 0.2 \\ &= 2.5 \end{aligned} \tag{4.38}\]

```python
print(np.round(np.sum(x**2 * p), 2))  # <x^2> = sum_j x_j^2 P(x_j)
```

2.5
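The following optional cross-check is not part of the original example. It draws many samples from this \(P(x)\) with NumPy's `Generator.choice` and compares the sample averages with the exact results \(\langle x \rangle = -0.3\) and \(\langle x^2 \rangle = 2.5\). Sample averages fluctuate, so they should only agree approximately. The seed and sample size are arbitrary choices.

```python
import numpy as np

x = np.array([-2, -1, 1, 2])
p = np.array([0.3, 0.3, 0.2, 0.2])

rng = np.random.default_rng(seed=42)         # reproducible random generator
samples = rng.choice(x, size=200_000, p=p)   # each draw is x_j with probability P(x_j)

sample_mean = samples.mean()                 # estimate of <x>
sample_mean_sq = (samples**2).mean()         # estimate of <x^2>
print(sample_mean, sample_mean_sq)           # close to -0.3 and 2.5
```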

4.2.2.5. Continuous Probability

If \(x\) is a continuous variable, e.g. position or momentum, then the probability distribution \(P(x)\) is a continuous probability density. In this case, sums over discrete values are replaced by integrals over the continuous variable. Take the normalization condition, for example. For a continuous probability density \(P(x)\), we have

\[\int_{-\infty}^{\infty} P(x)\,dx = 1\]

Note that the limits of integration are from \(-\infty\) to \(\infty\) indicating that \(x\) can take on values anywhere in this domain.
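The normalization integral can also be checked numerically. The sketch below uses the density \(P(x) = \tfrac{1}{2}e^{-|x|}\), which is chosen only for this example and does not come from the notes. It approximates the integral over \((-\infty, \infty)\) with a hand-written trapezoid rule on a wide finite grid. The truncation is reasonable here because \(P(x)\) is negligible far from the origin. The helper is written out by hand to avoid depending on a particular NumPy or SciPy version.

```python
import numpy as np

def trapezoid(y, x):
    """Trapezoid-rule approximation of the integral of y(x) over the grid x."""
    return np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2

def P(x):
    """Illustrative density P(x) = exp(-|x|) / 2, nonzero on the whole real line."""
    return 0.5 * np.exp(-np.abs(x))

# P(x) < 1e-17 for |x| > 40, so [-40, 40] stands in for (-inf, inf)
x = np.linspace(-40, 40, 800_001)
total = trapezoid(P(x), x)
print(total)  # should be very close to 1
```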

We might be interested in the probability of observing \(x\) within a particular subdomain. For example, the probability of observing \(x\) in the domain \(a \leq x \leq b\) is

\[P(a \leq x \leq b) = \int_a^b P(x)\,dx\]

A note on units: \(P(x)dx\) is a unitless quantity. This follows from the normalization condition. The integral \(\int P(x)dx = 1\) is unitless, so \(P(x)dx\) must be unitless. \(dx\) has the same units as \(x\) because it is an infinitesimal change in \(x\). Thus, \(P(x)\) has units of inverse, or reciprocal, \(x\).
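To illustrate the units argument, this sketch describes one distribution of lengths in two unit systems, nanometres and ångströms (1 nm = 10 Å). It assumes the illustrative density \(P(x) = e^{-|x|/\ell}/(2\ell)\) with \(\ell = 1\) nm. Changing the units of \(x\) changes the value of \(P(x)\) by a factor of 10. However, \(\int_a^b P(x)\,dx\) over the same physical interval does not change, which is consistent with \(P(x)dx\) being unitless. The helper names `P` and `prob_between` are hypothetical.

```python
import numpy as np

def trapezoid(y, x):
    """Trapezoid-rule approximation of the integral of y(x) over the grid x."""
    return np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2

def P(x, ell):
    """Illustrative density exp(-|x|/ell) / (2 ell); its units are 1/[x]."""
    return np.exp(-np.abs(x) / ell) / (2 * ell)

def prob_between(a, b, ell, n=100_001):
    """P(a <= x <= b), computed as the integral of P(x) dx over [a, b]."""
    x = np.linspace(a, b, n)
    return trapezoid(P(x, ell), x)

# the same physical distribution, with x in nm (ell = 1 nm) or in Å (ell = 10 Å)
print(P(0.0, 1.0), P(0.0, 10.0))                           # 0.5 per nm vs 0.05 per Å
print(prob_between(0, 1, 1.0), prob_between(0, 10, 10.0))  # same probability for 0 to 1 nm
```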

4.2.2.6. Computing Average Quantities from Continuous Probability Densities

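The average value of \(x\) for a continuous variable is given as

\[\langle x \rangle = \int_{-\infty}^{\infty} x\,P(x)\,dx\]

Similarly, the average value of \(x^2\), or the second moment of the distribution, is given as

\[\langle x^2 \rangle = \int_{-\infty}^{\infty} x^2\,P(x)\,dx\]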

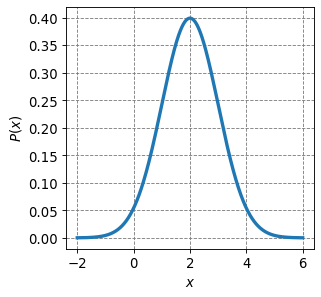
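Both averages can be approximated numerically with the same trapezoid rule. The sketch assumes the illustrative density \(P(x) = \tfrac{1}{2}e^{-|x-1|}\), whose exact moments are \(\langle x \rangle = 1\) and \(\langle x^2 \rangle = 3\). A fine grid that is wide enough for the tails to be negligible should reproduce those values closely.

```python
import numpy as np

def trapezoid(y, x):
    """Trapezoid-rule approximation of the integral of y(x) over the grid x."""
    return np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2

def P(x):
    """Illustrative normalized density exp(-|x - 1|) / 2, centered at x = 1."""
    return 0.5 * np.exp(-np.abs(x - 1))

# the tails outside [-40, 42] contribute a negligible amount
x = np.linspace(-40, 42, 820_001)
mean_x = trapezoid(x * P(x), x)       # <x>   = integral of x P(x) dx
mean_x2 = trapezoid(x**2 * P(x), x)   # <x^2> = integral of x^2 P(x) dx
print(mean_x, mean_x2)                # close to 1 and 3
```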

4.2.2.6.1. Example: A Gaussian Distribution in 1D

A Gaussian distribution is one of the most widely used continuous probability distributions. In 1D, it has the general form

\[P(x) = C e^{-\frac{(x-x_0)^2}{2\sigma^2}}\]

where \(x_0\) is the center/mean of the distribution, \(C\) is the normalization factor, and \(\sigma^2\) is the variance of the distribution (\(\sigma = \sqrt{\sigma^2}\) is the standard deviation). A 1D Gaussian distribution centered at \(x_0=2\) and with a standard deviation of \(\sigma = 1\) is plotted below.

```python
from scipy.stats import norm

# setup plot parameters
fontsize = 12
fig = plt.figure(figsize=(4, 4), dpi=80, facecolor='w', edgecolor='k')
ax = plt.subplot(111)
ax.grid(True, which='major', axis='both', color='#808080', linestyle='--')
ax.set_xlabel("$x$", size=fontsize)
ax.set_ylabel("$P(x)$", size=fontsize)
plt.tick_params(axis='both', labelsize=fontsize)

# plot a Gaussian centered at x0 = 2 with standard deviation sigma = 1
x = np.arange(-2, 6, 0.0001)
plt.plot(x, norm.pdf(x, loc=2, scale=1), lw=3)
plt.show()
```

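We need to choose \(C\) so that this function is normalized. To do so, we enforce the normalization condition

\[\int_{-\infty}^{\infty} P(x)\,dx = 1\]

Plugging in the equation for a Gaussian, we get

\[C\int_{-\infty}^{\infty} e^{-\frac{(x-x_0)^2}{2\sigma^2}}\,dx = 1\]

or

\[C = \left[\int_{-\infty}^{\infty} e^{-\frac{(x-x_0)^2}{2\sigma^2}}\,dx\right]^{-1}\]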

So how do we compute the integral \(\int_{-\infty}^{\infty} e^{-\frac{(x-x_0)^2}{2\sigma^2}}dx\)? We will use integral tables (such as https://en.wikipedia.org/wiki/List_of_definite_integrals). If you look for definite integrals involving exponential functions, you will find the following entry, which most closely resembles the Gaussian integral:

\[\int_0^{\infty} e^{-ax^2}\,dx = \frac{1}{2}\sqrt{\frac{\pi}{a}} \qquad (a > 0)\]

Note, however, that the limits of integration differ and that the argument of the exponent does not exactly match the Gaussian integral above.
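The table entry can be spot-checked numerically before it is used. This sketch compares a trapezoid-rule estimate of \(\int_0^\infty e^{-ax^2}dx\) with \(\frac{1}{2}\sqrt{\pi/a}\). The integral is truncated at \(x = 20\), an assumption that is safe for the values of \(a\) tried because the integrand is negligible there. The helper `half_gaussian_integral` is hypothetical.

```python
import numpy as np

def trapezoid(y, x):
    """Trapezoid-rule approximation of the integral of y(x) over the grid x."""
    return np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2

def half_gaussian_integral(a, x_max=20.0, n=200_001):
    """Approximate the integral of exp(-a x^2) from 0 to x_max (a stand-in for infinity)."""
    x = np.linspace(0.0, x_max, n)
    return trapezoid(np.exp(-a * x**2), x)

for a in [0.5, 1.0, 2.0]:
    numeric = half_gaussian_integral(a)
    table = 0.5 * np.sqrt(np.pi / a)  # table result (1/2) sqrt(pi / a)
    print(f"a = {a}: numeric = {numeric:.10f}, table = {table:.10f}")
```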

Our goal now is to manipulate the Gaussian integral so that it looks like the one from the table. We start with a \(u\)-substitution, \(u = x-x_0\), which implies that \(du = dx\):

\[\int_{-\infty}^{\infty} e^{-\frac{(x-x_0)^2}{2\sigma^2}}\,dx = \int_{-\infty}^{\infty} e^{-\frac{u^2}{2\sigma^2}}\,du = \int_{-\infty}^{\infty} e^{-\alpha u^2}\,du\]

where in the second step we made the substitution \(\alpha = \frac{1}{2\sigma^2}\). Note that we never changed the limits of integration. A \(u\)-substitution generally requires transforming the limits, but because \(\pm\infty - x_0 = \pm\infty\), no change is needed here.
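The shift \(u = x - x_0\) leaves the infinite limits unchanged, and therefore leaves the integral unchanged. This can be checked numerically. The sketch integrates \(e^{-(x-x_0)^2/(2\sigma^2)}\) for several centers \(x_0\) on one wide grid and compares each result with \(\int e^{-\alpha u^2}du\). The grid half-width of 50 is an assumption, and it is only safe while \(|x_0|\) and \(\sigma\) stay small compared with it.

```python
import numpy as np

def trapezoid(y, x):
    """Trapezoid-rule approximation of the integral of y(x) over the grid x."""
    return np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2

sigma = 1.0
alpha = 1 / (2 * sigma**2)                # alpha = 1 / (2 sigma^2)
grid = np.linspace(-50, 50, 1_000_001)    # one wide grid standing in for (-inf, inf)

# integral of exp(-alpha u^2) du, centered at u = 0
I_u = trapezoid(np.exp(-alpha * grid**2), grid)

def shifted_integral(x0):
    """Integral of exp(-(x - x0)^2 / (2 sigma^2)) dx on the same fixed grid."""
    return trapezoid(np.exp(-(grid - x0)**2 / (2 * sigma**2)), grid)

for x0 in [-5.0, 0.0, 2.0, 5.0]:
    print(f"x0 = {x0:4.1f}: shifted = {shifted_integral(x0):.10f}, u-form = {I_u:.10f}")
```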

4.2.2.6.2. Even and Odd Functions

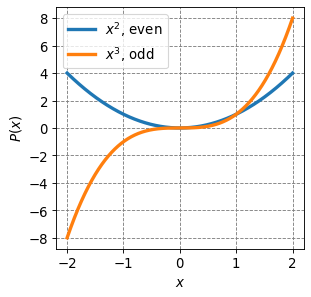

The last manipulation of the Gaussian integral that we need is to change the limits of integration from \(-\infty \rightarrow \infty\) to \(0 \rightarrow \infty\). We do this by using the fact that the Gaussian function is an even function. Specifically, \(f(u) = e^{-\alpha u^2}\) is an even function of \(u\). But what is an even function, and by contrast, what is an odd function?

An even function has the property that

\[f(-x) = f(x)\]

An odd function has the property that

\[f(-x) = -f(x)\]

Examples of even functions include even powers of \(x\), e.g. \(f(x) = x^2\). Examples of odd functions include the odd powers of \(x\), e.g. \(f(x) = x^3\). These two functions are plotted below.

```python
# setup plot parameters
fontsize = 12
fig = plt.figure(figsize=(4, 4), dpi=80, facecolor='w', edgecolor='k')
ax = plt.subplot(111)
ax.grid(True, which='major', axis='both', color='#808080', linestyle='--')
ax.set_xlabel("$x$", size=fontsize)
ax.set_ylabel("$f(x)$", size=fontsize)  # general functions, not probability densities
plt.tick_params(axis='both', labelsize=fontsize)

# plot an even function (x^2) and an odd function (x^3)
x = np.arange(-2, 2, 0.0001)
plt.plot(x, x**2, lw=3, label="$x^2$, even")
plt.plot(x, x**3, lw=3, label="$x^3$, odd")
plt.legend(fontsize=fontsize)
plt.show()
```
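The definitions \(f(-x) = f(x)\) and \(f(-x) = -f(x)\) can be spot-checked on sample points. The hypothetical helper `parity` below only compares values on a grid, so it is a sanity check rather than a proof. Even so, it confirms that \(x^2\) and \(e^{-\alpha u^2}\) behave as even functions, that \(x^3\) behaves as an odd one, and that a Gaussian shifted away from the origin is neither.

```python
import numpy as np

def parity(f, x):
    """Compare f(-x) with f(x) and -f(x) at the sample points x (a check, not a proof)."""
    if np.allclose(f(-x), f(x)):
        return "even"     # f(-x) = f(x) at every sample
    if np.allclose(f(-x), -f(x)):
        return "odd"      # f(-x) = -f(x) at every sample
    return "neither"

u = np.linspace(0.0, 3.0, 301)  # sample points; f(-u) is compared with f(u)
alpha = 0.5                      # alpha = 1/(2 sigma^2) with sigma = 1

print(parity(lambda t: t**2, u))                          # -> even
print(parity(lambda t: t**3, u))                          # -> odd
print(parity(lambda t: np.exp(-alpha * t**2), u))         # -> even
print(parity(lambda t: np.exp(-alpha * (t - 2)**2), u))   # -> neither
```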

Even functions have the property that

\[\int_{-a}^{a} f(x)\,dx = 2\int_{0}^{a} f(x)\,dx\]

We will employ this property for the Gaussian function.
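The snippet below gives a quick numerical illustration of this property, and of the odd-function property stated next. It applies a hand-written trapezoid rule on \([-a, a]\) and on \([0, a]\) with the same spacing, using \(a = 2\) as an arbitrary choice. For the even functions, the two results should agree. For the odd function \(x^3\), the integral over \([-a, a]\) should vanish.

```python
import numpy as np

def trapezoid(y, x):
    """Trapezoid-rule approximation of the integral of y(x) over the grid x."""
    return np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2

a = 2.0
full = np.linspace(-a, a, 40_001)   # grid over [-a, a]
half = np.linspace(0.0, a, 20_001)  # grid over [0, a] with the same spacing

def symmetric_vs_half(f):
    """Return (integral of f over [-a, a], 2 * integral of f over [0, a])."""
    return trapezoid(f(full), full), 2 * trapezoid(f(half), half)

for name, f in [("x^2 (even)", lambda t: t**2),
                ("exp(-t^2/2) (even)", lambda t: np.exp(-t**2 / 2)),
                ("x^3 (odd)", lambda t: t**3)]:
    whole, twice_half = symmetric_vs_half(f)
    print(f"{name:>20}: over [-a, a] = {whole:.6f}, 2 x over [0, a] = {twice_half:.6f}")
```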

Odd functions have the property that

\[\int_{-a}^{a} f(x)\,dx = 0\]

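Now back to the Gaussian function. We had that

\[\int_{-\infty}^{\infty} e^{-\frac{(x-x_0)^2}{2\sigma^2}}\,dx = \int_{-\infty}^{\infty} e^{-\alpha u^2}\,du\]

where \(\alpha = \frac{1}{2\sigma^2}\). Using the fact that \(e^{-\alpha u^2}\) is an even function of \(u\) (demonstrate that to yourself), we have that

\[\int_{-\infty}^{\infty} e^{-\alpha u^2}\,du = 2\int_{0}^{\infty} e^{-\alpha u^2}\,du = 2\cdot\frac{1}{2}\sqrt{\frac{\pi}{\alpha}} = \sqrt{\frac{\pi}{\alpha}}\]

Plugging back in for \(\alpha\), we get

\[\int_{-\infty}^{\infty} e^{-\frac{(x-x_0)^2}{2\sigma^2}}\,dx = \sqrt{2\pi\sigma^2}\]

or

\[C = \frac{1}{\sqrt{2\pi\sigma^2}}\]

And finally, our normalized Gaussian distribution:

\[P(x) = \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{(x-x_0)^2}{2\sigma^2}}\]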
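As a final sanity check, this sketch evaluates the normalized Gaussian for a few arbitrarily chosen centers and widths. It integrates each one numerically over \(x_0 \pm 15\sigma\), where essentially all of the weight lies. The integral should come out as 1 and \(\langle x \rangle\) as \(x_0\). This is the same formula that `scipy.stats.norm.pdf(x, loc=x0, scale=sigma)` evaluates, as used for the plot above. The helper names are hypothetical.

```python
import numpy as np

def trapezoid(y, x):
    """Trapezoid-rule approximation of the integral of y(x) over the grid x."""
    return np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2

def gaussian(x, x0, sigma):
    """Normalized 1D Gaussian P(x) with center x0 and standard deviation sigma."""
    return np.exp(-(x - x0)**2 / (2 * sigma**2)) / np.sqrt(2 * np.pi * sigma**2)

def check_gaussian(x0, sigma, n=300_001):
    """Return (integral of P(x) dx, <x>) on a grid spanning x0 +/- 15 sigma."""
    x = np.linspace(x0 - 15 * sigma, x0 + 15 * sigma, n)
    P = gaussian(x, x0, sigma)
    return trapezoid(P, x), trapezoid(x * P, x)

for x0, sigma in [(2.0, 1.0), (0.0, 0.5), (-3.0, 2.5)]:
    total, mean = check_gaussian(x0, sigma)
    print(f"x0 = {x0:5.1f}, sigma = {sigma:3.1f}: integral = {total:.10f}, <x> = {mean:.6f}")
```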